Protecting Your Audience: How Real-Time Content Moderation Safeguards Live Translations

In many churches, schools, companies and public events, the decision to adopt AI-powered transcription and translation comes with a difficult question: what happens if something inappropriate is said on the microphone?

AI models are designed to be accurate, not selective. If they hear a word, they will faithfully transcribe and translate it — including profanity, offensive expressions or sensitive terms. For organizations that rely on trust, reputation and a safe environment for all ages, this is a real risk, not a theoretical concern.

Real-time content moderation is therefore not just a technical feature. It becomes a necessary layer of protection for live and recorded communication.

The Risk of Unfiltered AI Transcription and Translation

Live speech is unpredictable by nature. A speaker may:

- quote a story that includes strong language,

- mention a term that is acceptable in one culture but offensive in another,

- read a comment or question submitted by the audience,

- or be interrupted by background audio that contains inappropriate content.

In a purely “raw” AI pipeline, every word spoken into the microphone can end up on the screen as text, and in the listener’s ear as translated audio. Once that happens, the damage is done: the audience has seen or heard something that the organization never intended to endorse.

For churches and faith communities, this can conflict with the values they want to preserve in a public service. For schools, it can create issues with parents and administration. For companies and event organizers, it can affect brand perception, compliance and trust.

A Real-World Scenario: When a Live Event Goes Wrong on Screen

Consider a church that is using an AI-powered system to provide English translation for a service originally preached in Portuguese. The pastor shares a testimony involving a person who, in a moment of anger, insults a colleague using strong language. The pastor quotes the original sentence to illustrate the transformation that occurred later in that person’s life.

From the speaker’s perspective, the focus is on the transformation. But from the system’s perspective, there is no context; there is only audio.

Without any content moderation, the profanity is:

- transcribed in the original language,

- translated into English,

- and, if text is being displayed, shown on a screen or on people’s phones.

In a matter of seconds, an expression that was meant only as part of a narrative appears prominently in the middle of the service, and possibly as spoken audio in another language as well.

Even if the intention was didactic, the impact can be negative, especially for first-time visitors or for people who are more sensitive to this type of language.

How Real-Time Content Moderation Works in Practice

To avoid this scenario, a content moderation layer is added directly into the transcription and translation pipeline.

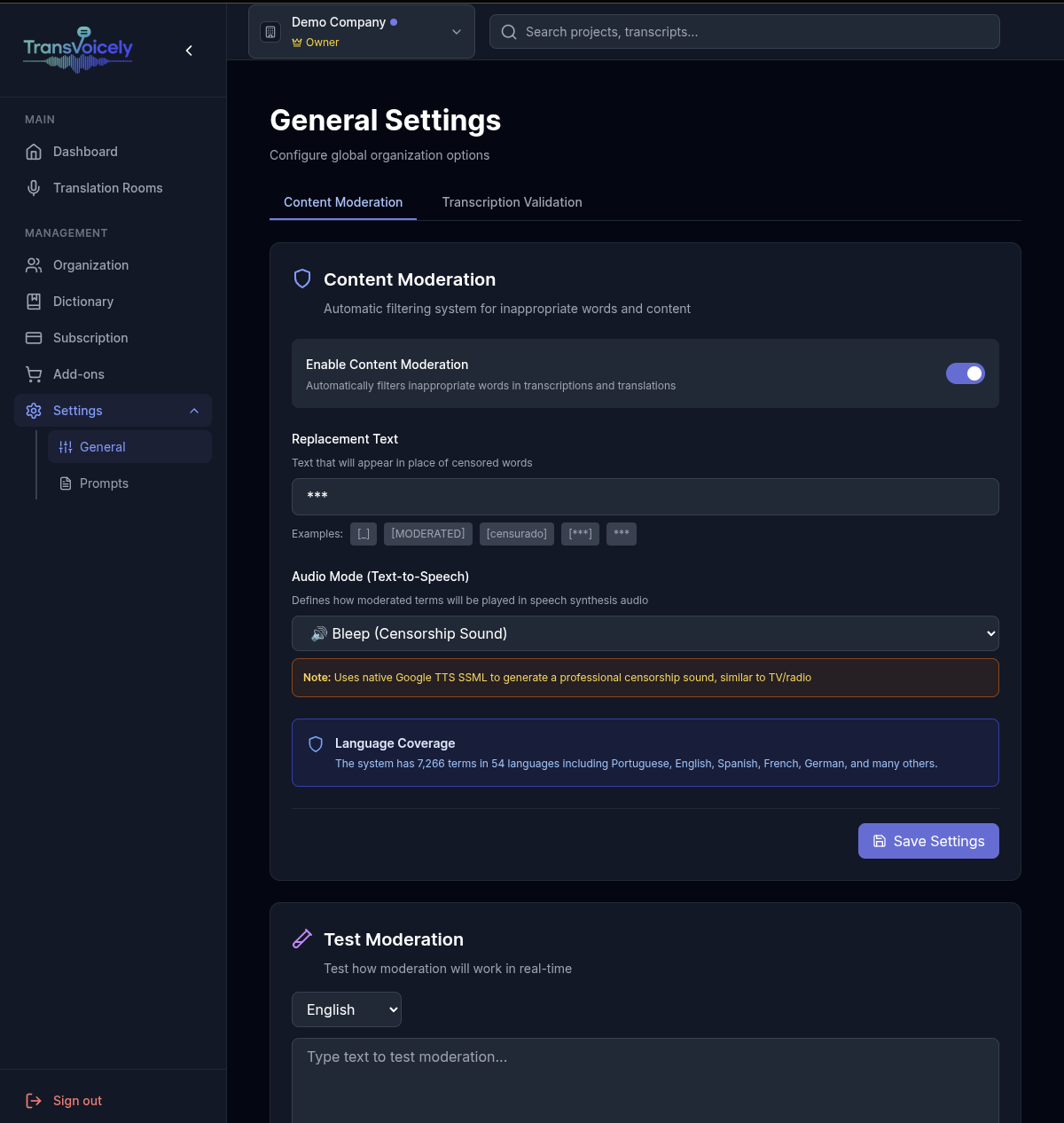

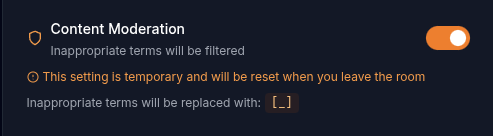

In platforms like TransVoicely, this typically involves:

- A global list of inappropriate terms, organized by language and category.

- Rules that define whether a word should be masked, replaced or blocked.

- Real-time processing of each transcribed segment before it is displayed or used as input for translation or TTS.
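The combination of a global term list and per-organization rules can be pictured as a small lookup table. The Python below is a minimal sketch under stated assumptions, not TransVoicely’s implementation: the `Action` values, the category names, the placeholder terms and the `POLICY` mapping are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Optional, Tuple


class Action(Enum):
    MASK = "mask"        # hide the term behind a neutral marker such as "***"
    REPLACE = "replace"  # substitute a more acceptable term
    BLOCK = "block"      # withhold the whole segment


@dataclass(frozen=True)
class TermEntry:
    term: str                          # lowercase form to match
    category: str                      # hypothetical categories: "mild", "profanity", "slur"
    replacement: Optional[str] = None  # only used when the action is REPLACE


# Hypothetical global list, keyed by language code. Placeholder terms only.
GLOBAL_TERMS: Dict[str, List[TermEntry]] = {
    "en": [
        TermEntry("damn", "mild", replacement="darn"),
        TermEntry("badword", "profanity"),
        TermEntry("slurword", "slur"),
    ],
    "pt": [
        TermEntry("palavrao", "profanity"),
    ],
}

# Hypothetical organization policy: one action per category.
POLICY: Dict[str, Action] = {
    "mild": Action.REPLACE,
    "profanity": Action.MASK,
    "slur": Action.BLOCK,
}


def build_index(language: str) -> Dict[str, TermEntry]:
    """Index one language's entries by term for constant-time lookups."""
    return {entry.term: entry for entry in GLOBAL_TERMS.get(language, [])}


def rule_for(word: str, index: Dict[str, TermEntry]) -> Optional[Tuple[Action, TermEntry]]:
    """Return (action, entry) for a listed word, or None if the word is allowed."""
    entry = index.get(word.lower())
    if entry is None:
        return None
    # Fall back to masking when a category has no explicit rule.
    return POLICY.get(entry.category, Action.MASK), entry
```

Keeping the category separate from the action lets several organizations share one global list while applying different policies, for example replacing mild language in one setting and masking it in another.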

When a problematic word is detected:

- it can be replaced by a neutral marker (for example, "***"),

- or substituted with a more acceptable term, depending on the policy of the organization.

This ensures that the idea of the sentence can still be understood, but the explicit term does not appear in text or audio.
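Applied to a single transcribed segment, masking and substitution might look like the sketch below. It assumes a hypothetical per-language `RULES` table and uses `"***"` as the neutral marker; only the matched words change, so the rest of the sentence and its punctuation survive. A segment containing a term marked for blocking is withheld entirely.

```python
import re
from typing import Dict, Optional, Tuple

MASK_MARKER = "***"

# Hypothetical rules for one language: term -> (action, replacement or None).
RULES: Dict[str, Tuple[str, Optional[str]]] = {
    "damn": ("replace", "darn"),
    "badword": ("mask", None),
    "slurword": ("block", None),
}

# \w matches Unicode letters, so accented words are treated as single tokens.
WORD_RE = re.compile(r"\w+")


def moderate_segment(text: str) -> Optional[str]:
    """Return the moderated segment, or None if the whole segment is blocked."""
    # Blocking takes priority: if any word triggers it, nothing is shown.
    for match in WORD_RE.finditer(text):
        rule = RULES.get(match.group().lower())
        if rule is not None and rule[0] == "block":
            return None

    def substitute(match: re.Match) -> str:
        rule = RULES.get(match.group().lower())
        if rule is None:
            return match.group()  # allowed word: keep it exactly as transcribed
        action, replacement = rule
        if action == "replace" and replacement:
            return replacement
        return MASK_MARKER        # mask (also the fallback for a missing replacement)

    return WORD_RE.sub(substitute, text)
```

For instance, `moderate_segment("He said damn it.")` returns `"He said darn it."`. This sketch matches single words only; multi-word expressions and preserving the speaker’s capitalization would need additional handling.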

From the operator’s point of view, the process runs on its own. There is no need to manually edit each phrase in real time. The moderation system works in the background, as an always-on safety filter.

Why This Matters for Churches, Schools and Public-Facing Organizations

For organizations that serve families, youth and the broader community, communication is not only about transmitting information; it is also about protecting an environment of respect and safety.

Real-time content moderation helps:

- align AI output with institutional values,

- prevent accidental exposure to offensive language,

- reduce the risk of complaints and misunderstandings,

- and reinforce the perception of care towards the audience.

In a multilingual setting, the importance is even greater. A single word spoken once can be propagated into multiple languages at the same time. Without moderation, the impact of a single incident multiplies.

Beyond Blacklists: The Role of Custom Dictionaries

In addition to generic lists of inappropriate terms, some platforms provide a custom dictionary feature that allows each organization to define its own corrections and preferences.

While the focus of this article is content moderation, it is worth noting that a custom dictionary can complement moderation by:

- correcting the spelling of specific names or terms,

- standardizing how internal ministries, departments or brands are written,

- and reducing the risk of misinterpretations caused by similar-sounding words.

This level of customization deserves its own dedicated discussion, but it reinforces a central idea: AI output can and should be adapted to the reality of each context.
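As a rough illustration, a custom dictionary can be treated as a table of corrections applied to each segment. The entries below (`"kids church"`, `"pastor jon"`, `"youth ministry"`) are hypothetical, and the whole-word, case-insensitive matching is one reasonable design choice rather than a documented platform behavior.

```python
import re
from typing import Dict

# Hypothetical organization dictionary: recognized form (lowercase) -> preferred spelling.
CUSTOM_DICTIONARY: Dict[str, str] = {
    "kids church": "KidsChurch",
    "pastor jon": "Pastor John",
    "youth ministry": "Youth Ministry",
}


def build_pattern(dictionary: Dict[str, str]) -> "re.Pattern[str]":
    """Compile one case-insensitive, whole-word pattern for all entries."""
    # Longest entries first so multi-word phrases win over shorter overlaps.
    alternatives = sorted(dictionary, key=len, reverse=True)
    return re.compile(
        r"\b(" + "|".join(re.escape(a) for a in alternatives) + r")\b",
        re.IGNORECASE,
    )


def apply_dictionary(text: str, dictionary: Dict[str, str], pattern: "re.Pattern[str]") -> str:
    """Replace every dictionary match with the organization's preferred spelling."""
    # Keys are stored lowercase, so the match is lowercased before lookup.
    return pattern.sub(lambda m: dictionary[m.group(1).lower()], text)


PATTERN = build_pattern(CUSTOM_DICTIONARY)
```

Because matching stops at word boundaries, `"pastor Jonathan"` is left unchanged while `"pastor Jon"` becomes `"Pastor John"`.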

Implementing Moderation Without Slowing Down the Flow

A common concern is whether content moderation will introduce latency or interrupt the natural rhythm of a live event. Properly implemented, it should not.

In a well-designed system:

- moderation is applied to each segment as soon as it is transcribed,

- the filter works in-memory, without complex external calls,

- and the result is delivered to the screen or TTS engine with minimal additional delay.

From the perspective of the speaker and the audience, the flow remains continuous. The only visible difference is that certain words never appear — which, in many environments, is exactly the goal.
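The ordering described above, moderating each segment in memory before it reaches the screen, the translator or the TTS engine, can be sketched as follows. The term list is a hypothetical placeholder, and `translate` and `display` stand in for whatever translation service and output channels a real system uses. The pattern is compiled once at startup, so each segment costs a single in-memory regex pass, and because filtering happens before the fan-out, every target language receives the already-masked text.

```python
import re
from typing import Callable, Dict, List

# Compiled once at startup and kept in memory: no per-segment I/O or network calls.
BLOCKED_TERMS = ["badword", "otherbadword"]  # hypothetical placeholder list
MODERATION_RE = re.compile(
    r"\b(" + "|".join(map(re.escape, BLOCKED_TERMS)) + r")\b", re.IGNORECASE
)


def moderate(text: str) -> str:
    """Mask every listed term with a neutral marker."""
    return MODERATION_RE.sub("***", text)


def handle_segment(
    segment: str,
    target_languages: List[str],
    translate: Callable[[str, str], str],  # hypothetical: (text, language) -> translation
    display: Callable[[str, str], None],   # hypothetical: (channel, text) -> shows text
) -> Dict[str, str]:
    """Moderate a transcribed segment once, then fan it out to every target language."""
    clean = moderate(segment)                # filter before anything is shown or translated
    display("source", clean)
    outputs: Dict[str, str] = {}
    for lang in target_languages:
        translated = translate(clean, lang)  # the translator never sees the raw term
        display(lang, translated)
        outputs[lang] = translated           # the same text could feed a TTS engine
    return outputs
```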

Conclusion: Safety, Respect and Trust in Multilingual Communication

Real-time AI transcription and translation can significantly extend the reach of a message. However, extending reach also extends responsibility.

Content moderation ensures that this extended reach does not come at the cost of exposing audiences to language that contradicts the values or standards of the organization.

By combining powerful AI with thoughtful safeguards, platforms like TransVoicely allow churches, schools, companies and event organizers to reap the benefits of automation while maintaining control over what is actually seen and heard.

In a world where every word can be amplified instantly across languages, moderation is not a luxury — it is a necessary part of responsible communication.